The last place anyone wants to be during a pandemic is a crowded subway car. In today’s urban environment, one of the most convenient socially distant ways to get around is by shared scooters and bikes, otherwise known as micromobility. Although micromobility has found a new calling in helping people keep their distance while traveling, it still has a few kinks to sort out before it becomes the completely safe form of travel we need it to be.

In late 2019, before COVID-19, the City of San Francisco announced that, to ensure pedestrian safety, companies seeking the new Powered Scooter Share Permits will at some point be required to implement measures that deter customers from riding on sidewalks. In our current COVID-19 climate, a collision between a scooter and a pedestrian risks not only injury to both parties but also exposure to infection.

Proactively detecting when a customer is riding on a sidewalk and providing immediate feedback could directly improve how shared bikes and scooters coexist with pedestrians in daily life, increase adoption, and potentially save lives.

Although it might seem somewhat simple to detect whether a scooter or bike is riding on a sidewalk, it’s actually a complex engineering problem, perfect for a couple of engineers with a little extra time on their hands! In this post, we will be exploring some of the work we did to investigate the nature of sidewalk detection, as well as how we successfully developed a system that can detect if a scooter is currently traveling on a street or a sidewalk.

There were a number of different potential approaches to solving this problem. We set out to design a solution that would fit the very specific needs of detecting sidewalks on shared micromobility scooters.

On a practical level, our first question was: “How do we know when we’re riding on a street or a sidewalk?” In our experience, when riding a scooter we identify the type of terrain primarily through sight and spatial awareness, but we can also sense a difference in the vibrations we feel. Both visual and tactile cues are good candidates for translation into machine learning.

When thinking about detecting terrain through sight, there were a number of obvious paths using computer vision. This approach would have required cameras, a technology that hasn’t made a significant appearance in shared micromobility hardware to date. That’s not to say cameras will never appear on scooters; however, for this problem, the hardware design work required to add a camera to a scooter could quickly become the most costly aspect of the project. Computer vision also requires much more computational capacity than is currently available on board a scooter. Real-time classification would require either using IoT bandwidth to upload camera data to a cloud-based machine learning (ML) system or increasing the computational capacity of the scooter.

Ruling out a visual method left us with the second way we had identified to sense differences between terrains: vibration. The existing IoT units of many scooters already contain an Inertial Measurement Unit (IMU), which can measure vibrations as a scooter travels over any terrain. IMU data is much smaller than camera data and generally takes a fraction of the capacity to process on-device. On-device inference adds no recurring network bandwidth or compute cost; the only increased operating cost is the power consumed evaluating the incoming data. While cloud-based inference has significant computational capacity, inference that runs on-device doesn’t need to wait for a network call subject to variable latency and availability, which makes it the most consistent and reliable way to classify terrain in real time.

Another important factor was the size of the dataset required in order to implement sidewalk detection, as well as the data collection efforts necessary to implement the technology on a wider scale. We believed that the sidewalk-detection solution would benefit from the ability to be retrained with new data quickly, and also potentially with a wide variety of data (since there is such variation in sidewalk types).

When taking all these factors into consideration, an on-device machine learning inference appeared to be best suited.

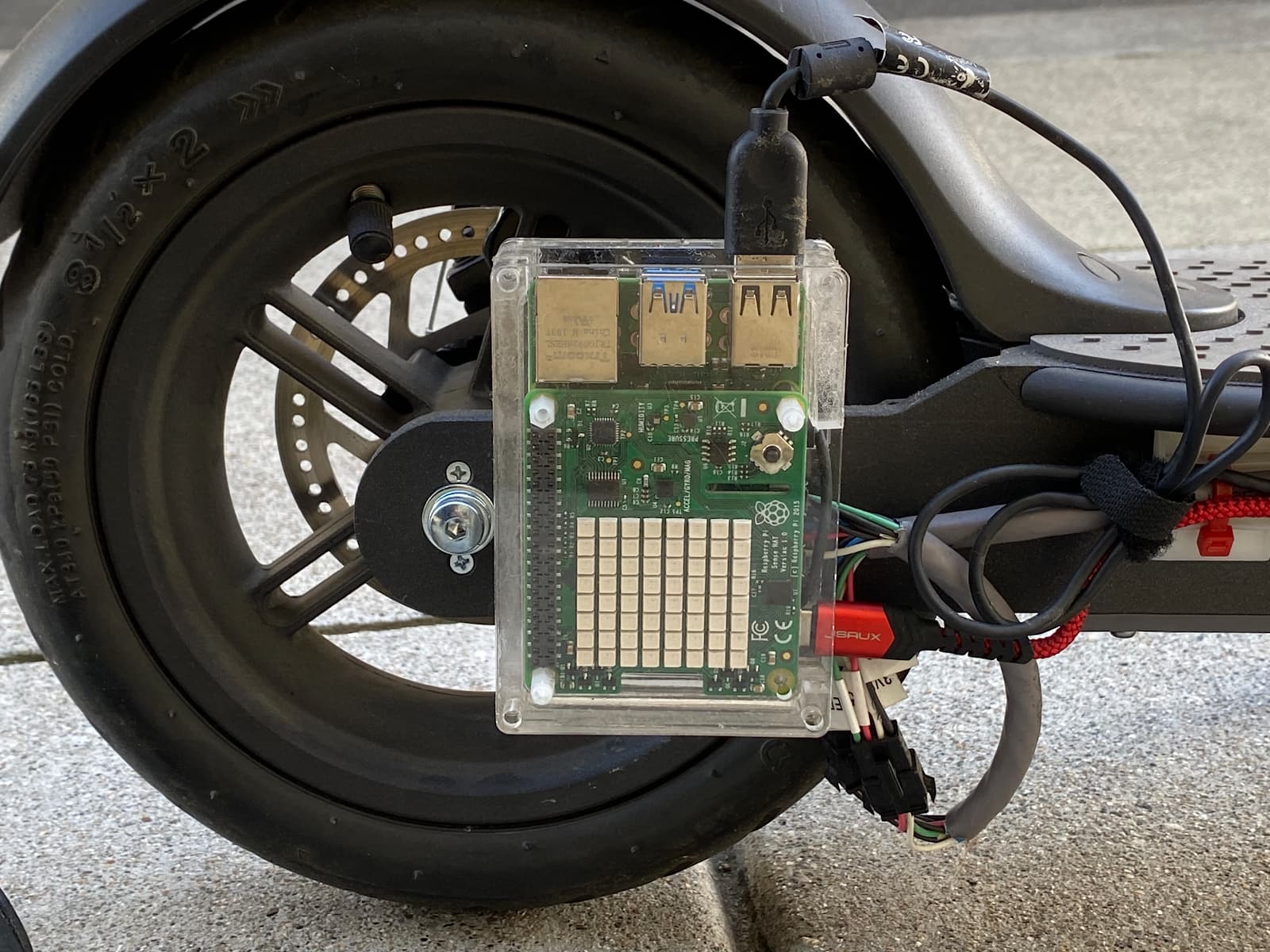

Our next step was to build a prototype platform. We bought a common off-the-shelf scooter and mounted a prototype board with an IMU to the rear hub. A camera was attached, along with a few switches and some LEDs. We then designed and 3D printed parts to attach the switches, and laser cut a case for the MCU + IMU.

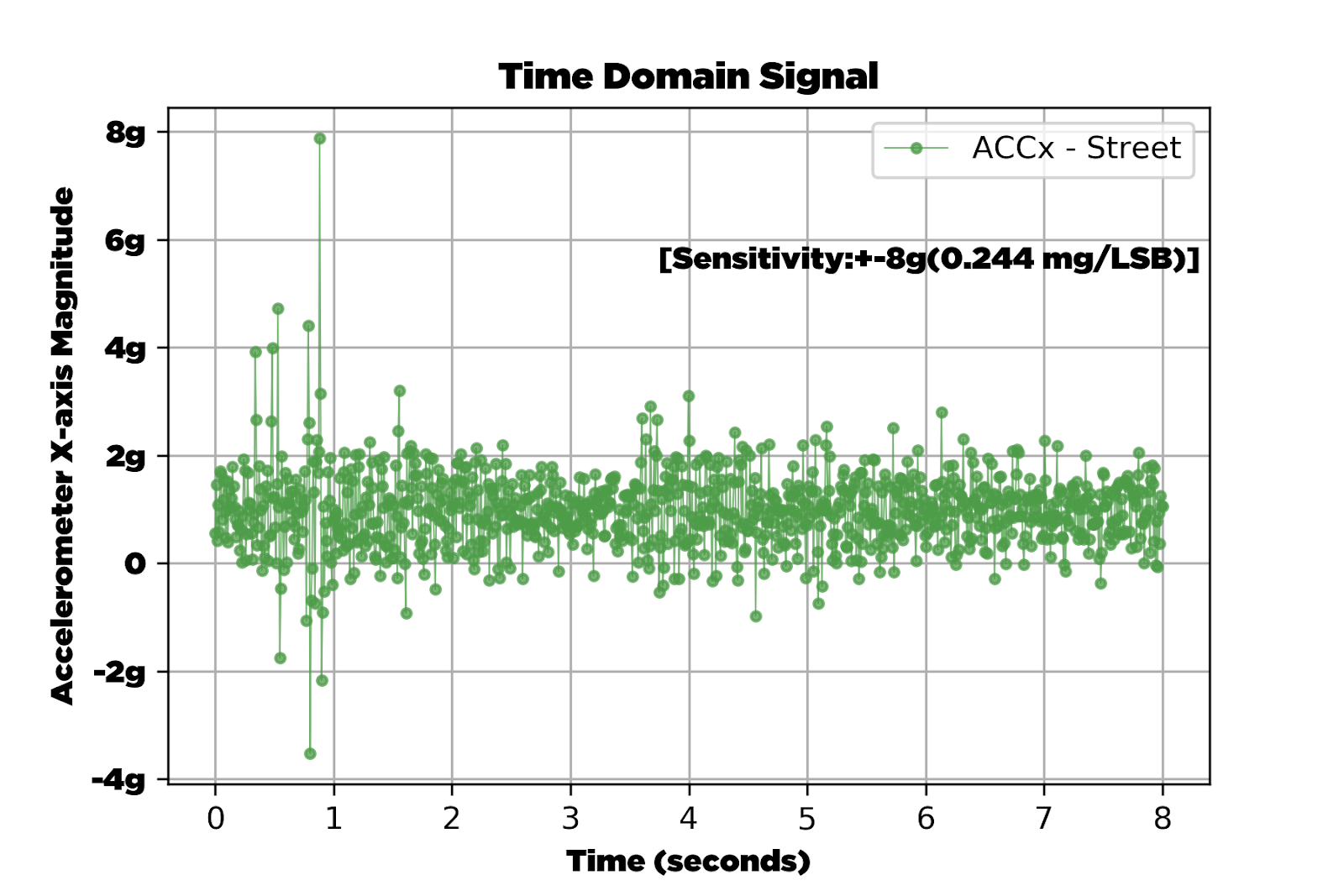

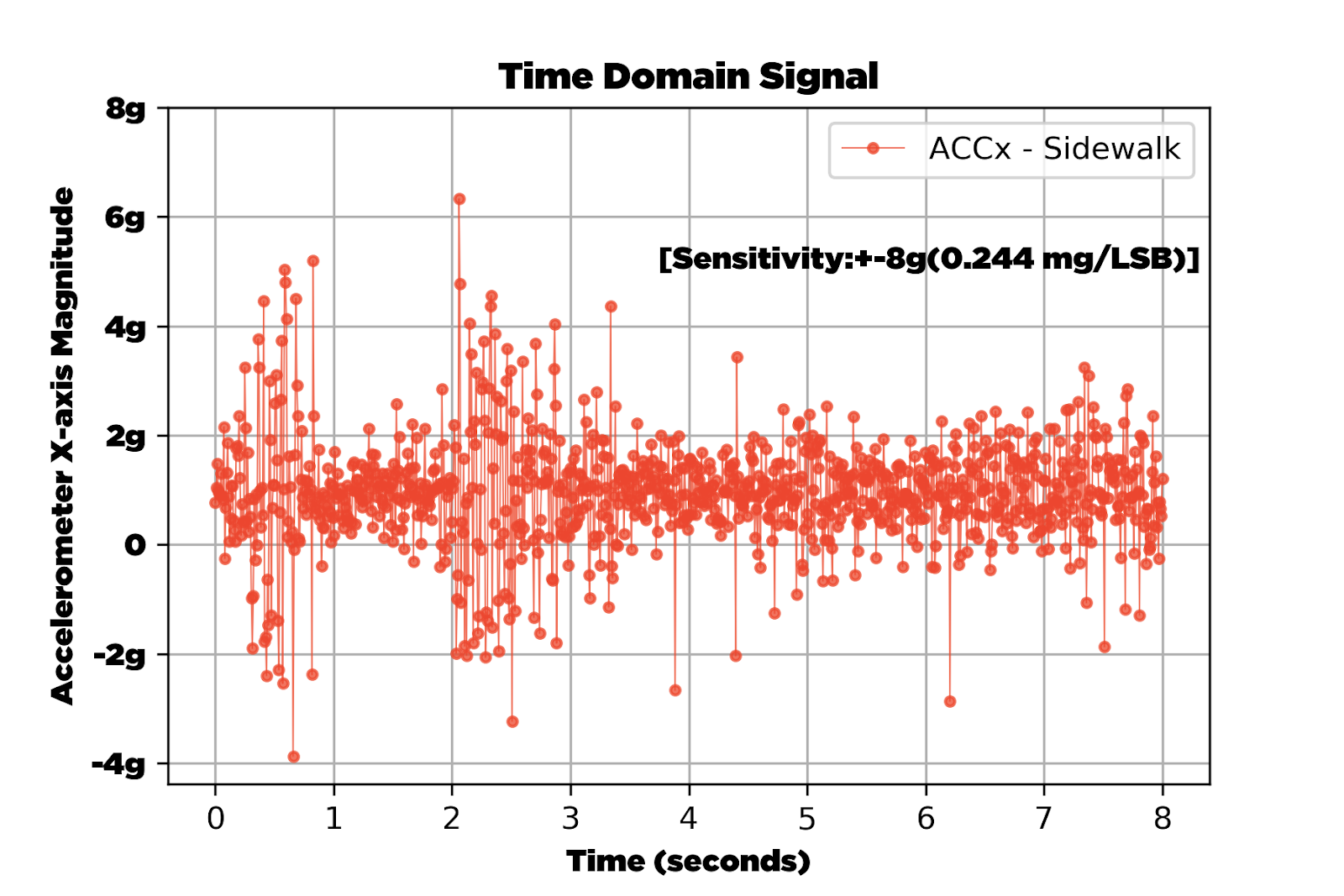

While gathering data, we used a switch mounted on the scooter’s handlebars to manually indicate whether we were riding on a street or a sidewalk. This label was recorded alongside the raw IMU data, which consisted of X, Y, and Z axis readings from each of an accelerometer, a magnetometer, and a gyroscope.
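To make the logging step concrete, here is a minimal sketch of what one labeled row might look like: nine IMU channels plus the switch label, written out as CSV. The field names, the millisecond timestamp, and the CSV format are all our own assumptions for illustration, not the actual logging format used on the prototype.

```python
import csv
import io
from dataclasses import astuple, dataclass, fields


# Hypothetical record layout: one row per IMU reading, with the handlebar
# switch state captured at the same instant as the label.
@dataclass
class LabeledImuSample:
    timestamp_ms: int
    accel_x: float
    accel_y: float
    accel_z: float
    mag_x: float
    mag_y: float
    mag_z: float
    gyro_x: float
    gyro_y: float
    gyro_z: float
    label: str  # "street" or "sidewalk", read from the handlebar switch


def write_samples(samples, stream):
    """Write samples as CSV, with a header row derived from the dataclass fields."""
    writer = csv.writer(stream)
    writer.writerow([f.name for f in fields(LabeledImuSample)])
    for sample in samples:
        writer.writerow(astuple(sample))


buf = io.StringIO()
write_samples(
    [LabeledImuSample(0, 0.01, -0.02, 9.81, 30.0, 5.0, -40.0, 0.1, 0.0, -0.1, "sidewalk")],
    buf,
)
rows = buf.getvalue().splitlines()
```

Keeping the label in the same row as the sensor readings means every sample is self-describing, so windows can later be cut anywhere in the stream without a separate label lookup.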

In order to reduce the complexity of our initial analysis, we constrained our data gathering locations to a single street and sidewalk combination. We selected a block in San Francisco which had little to no damage and appeared representative of many streets in San Francisco.

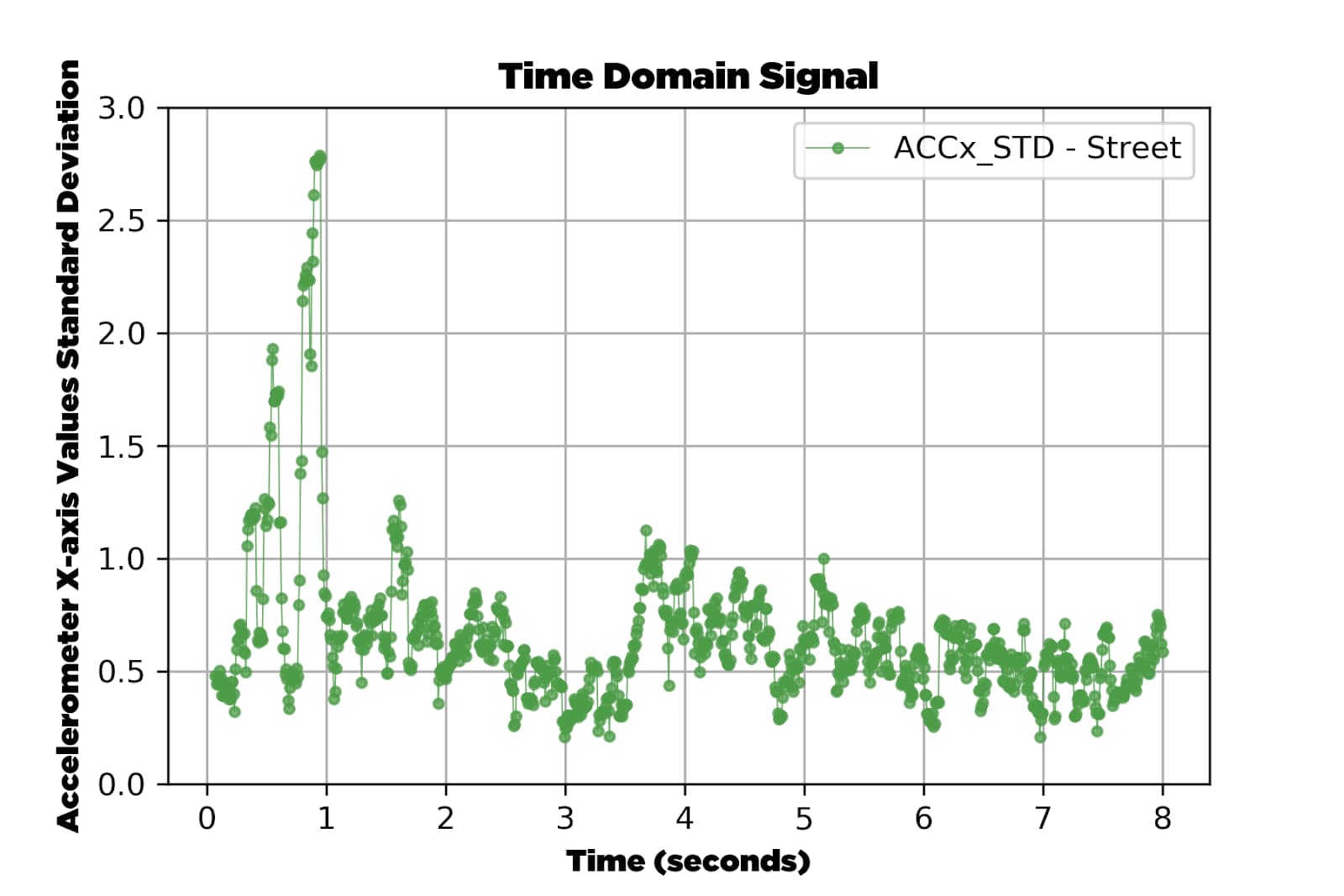

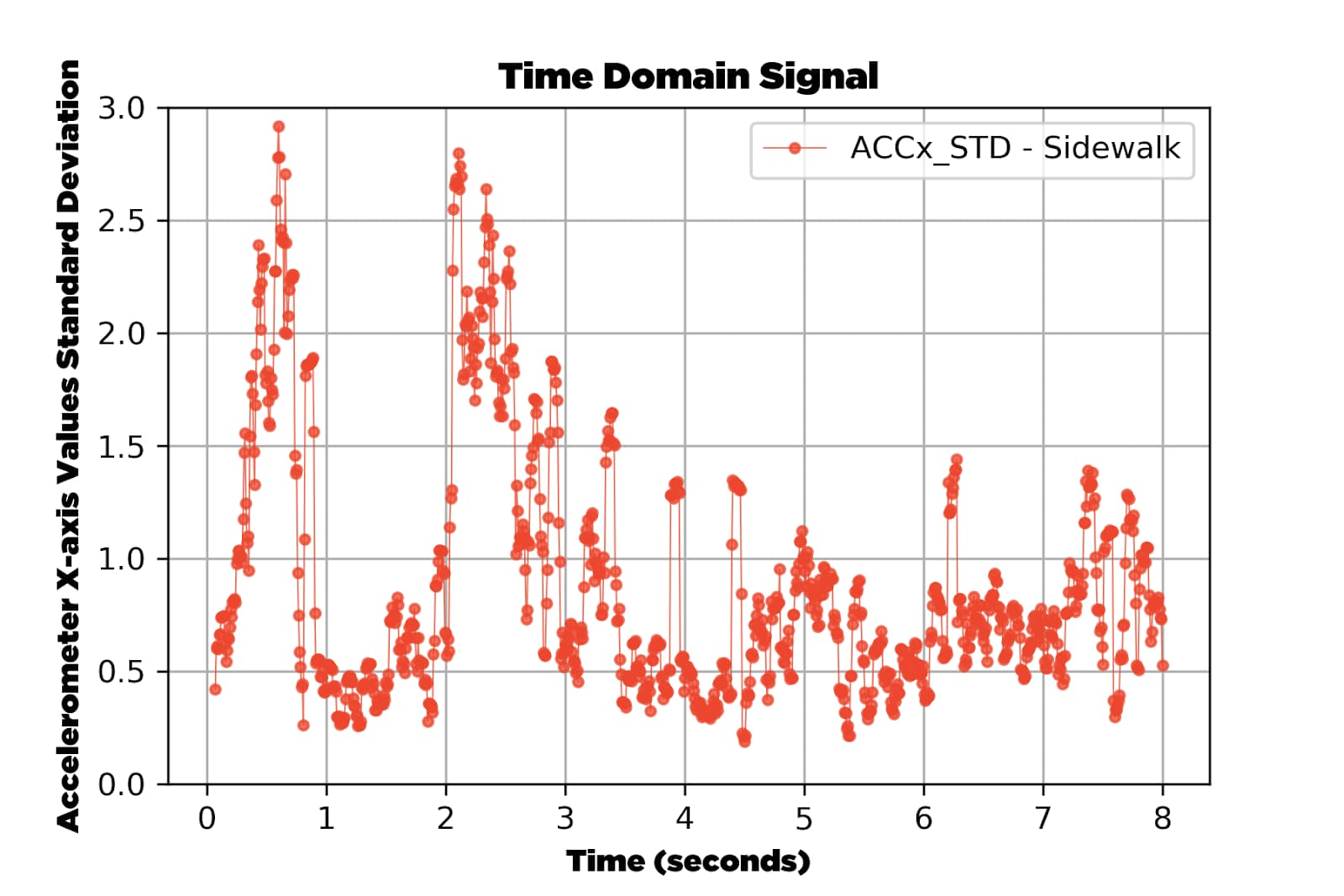

Once the data started rolling in, we observed peaks in certain accelerometer dimensions that appeared to correspond to the vibrations of riding over the contraction joints in the sidewalk. These features appeared with significantly lower frequency in the corresponding street waveforms.
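One simple way to quantify those joint-crossing spikes is to count local maxima that rise well above the signal’s baseline. The sketch below does that on a single accelerometer axis; the threshold and the sample values are hypothetical, chosen only to show that a sidewalk-like trace produces peaks where a street-like trace does not.

```python
from statistics import fmean


def count_peaks(values, threshold):
    """Count local maxima whose deviation from the mean exceeds `threshold`.

    The mean of the window is used as a baseline (e.g. to remove gravity on
    the vertical axis); endpoints are never counted as peaks.
    """
    baseline = fmean(values)
    dev = [abs(v - baseline) for v in values]
    count = 0
    for i in range(1, len(dev) - 1):
        if dev[i] > threshold and dev[i] > dev[i - 1] and dev[i] >= dev[i + 1]:
            count += 1
    return count


# Hypothetical vertical-axis readings in m/s^2.
street = [9.80, 9.82, 9.79, 9.81, 9.80, 9.82, 9.79, 9.81]
sidewalk = [9.80, 9.81, 11.5, 9.80, 9.79, 9.82, 11.8, 9.80]

street_peaks = count_peaks(street, threshold=1.0)
sidewalk_peaks = count_peaks(sidewalk, threshold=1.0)
```

A fixed threshold like this is fragile across scooters and speeds, which is part of why a learned model over several statistics tends to be more robust than hand-tuned peak detection.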

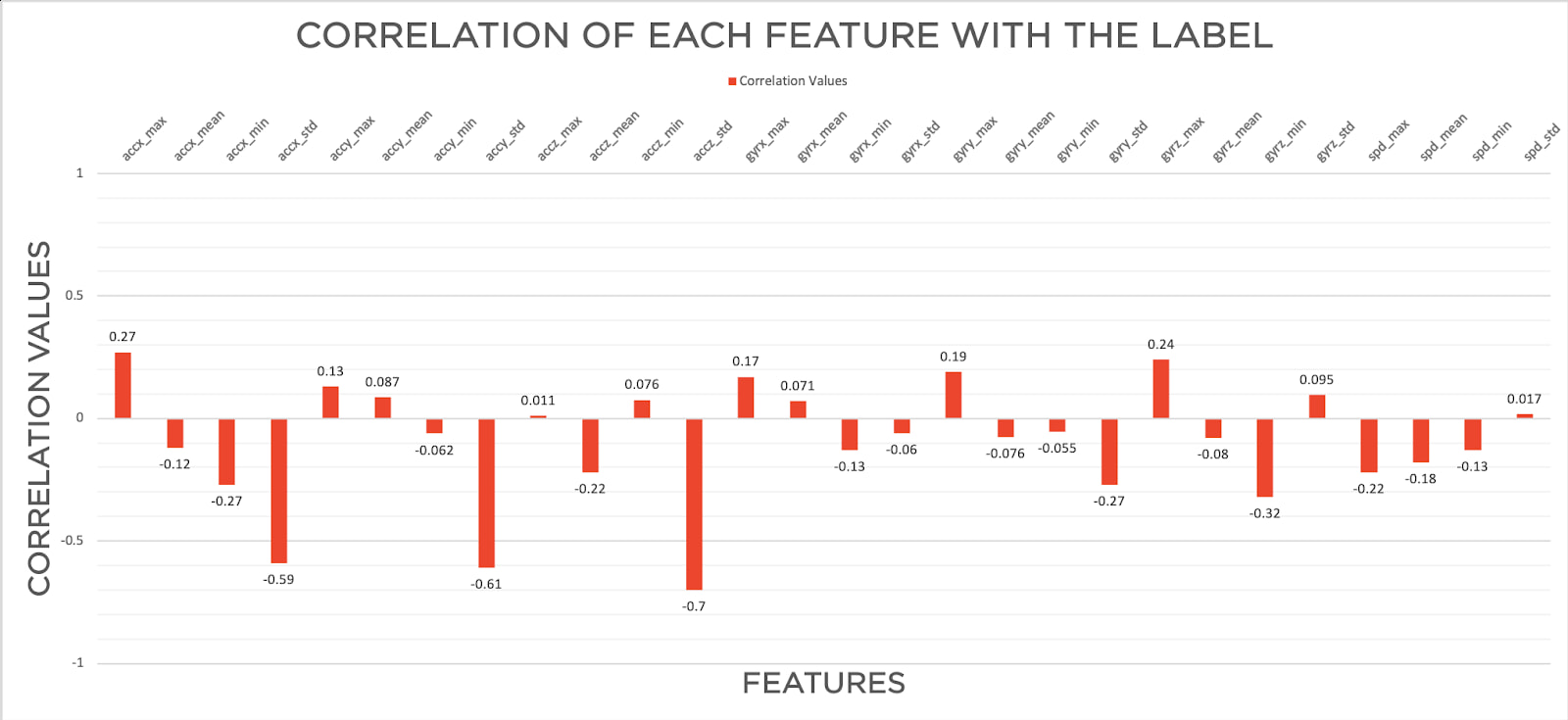

With these observations in mind, we investigated numerous models to achieve the best accuracy, but feature extraction proved to be a challenging problem. We consulted some of our ML experts to home in on which features to extract and how to extract them from the time-series data. Our working theory was that we could extract key indicators of these features by applying statistical functions to our raw data samples.
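As an illustration of applying statistical functions to raw samples, the sketch below turns a window of accelerometer readings into per-axis summary statistics. The choice of statistics (mean, min, max, population standard deviation) and the feature names are our own assumptions; the actual feature set used in this project is not spelled out here.

```python
from statistics import fmean, pstdev


def window_features(window):
    """Summarize a window of (x, y, z) accelerometer tuples.

    Returns a flat dict with mean, min, max, and population standard
    deviation for each axis (12 features in total).
    """
    features = {}
    for axis_idx, axis in enumerate("xyz"):
        vals = [sample[axis_idx] for sample in window]
        features[f"{axis}_mean"] = fmean(vals)
        features[f"{axis}_min"] = min(vals)
        features[f"{axis}_max"] = max(vals)
        features[f"{axis}_std"] = pstdev(vals)
    return features


# Hypothetical three-sample window.
f = window_features([(0.0, 0.0, 9.8), (0.0, 2.0, 9.8), (0.0, 4.0, 9.8)])
```

Flattening each window into a fixed-length feature dict is what allows a standard classifier to consume time-series data that would otherwise vary in length.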

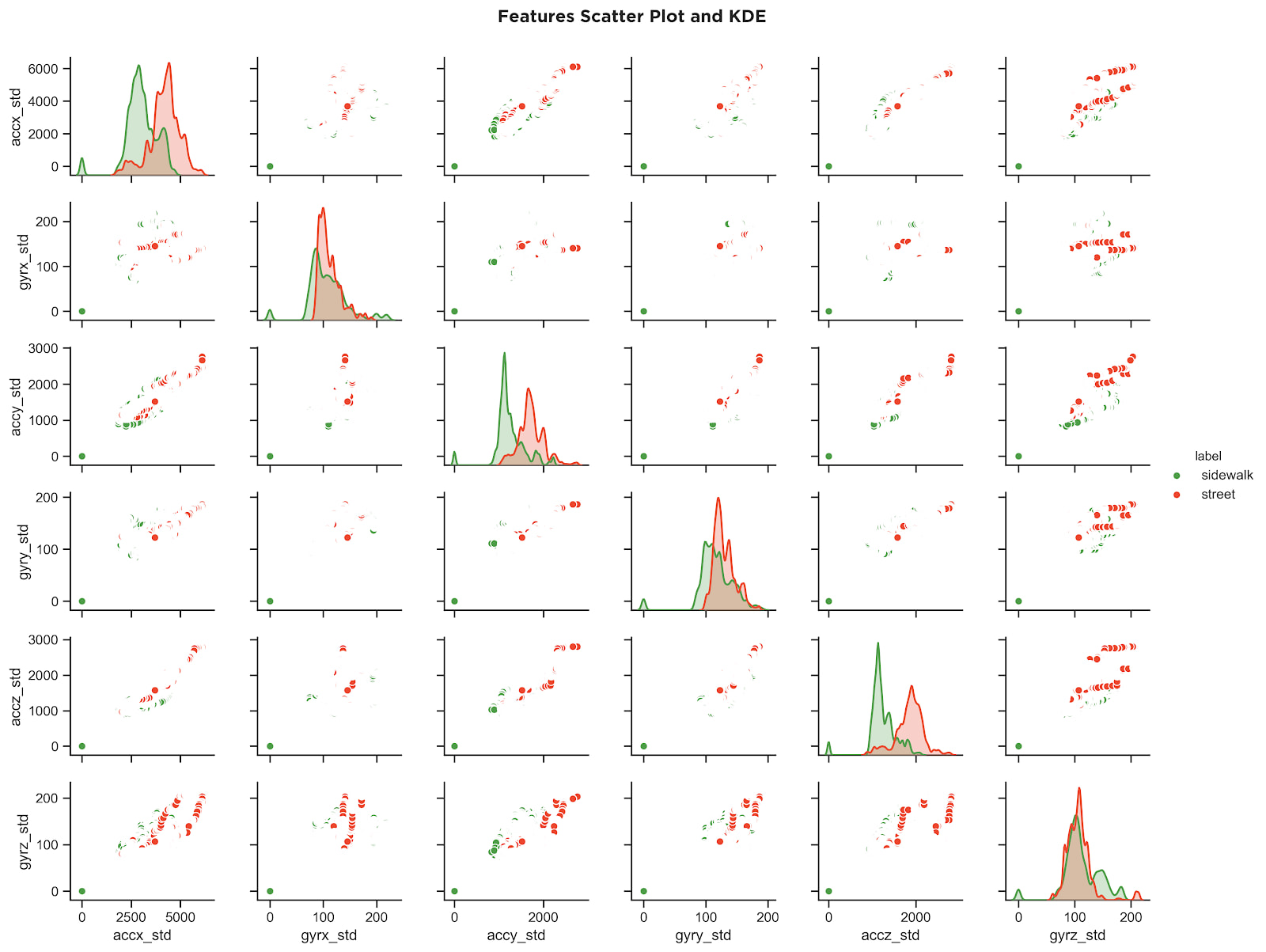

When we compared how metrics such as the mean, extents (minimum and maximum), and standard deviation correlated with the recorded label, we discovered a distinct relationship between the standard deviation of the accelerometer values and the type of terrain.
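One way to measure how strongly a metric tracks a binary label is the Pearson correlation with the label coded as 0 and 1 (the point-biserial correlation). The sketch below computes it by hand; the per-window standard deviation values are invented for illustration and are not our measured data.

```python
from statistics import fmean, pstdev


def pearson(xs, ys):
    """Pearson correlation using population statistics.

    With ys coded as 0/1 this is the point-biserial correlation.
    Assumes neither input is constant (otherwise the denominator is zero).
    """
    mx, my = fmean(xs), fmean(ys)
    cov = fmean([(x - mx) * (y - my) for x, y in zip(xs, ys)])
    return cov / (pstdev(xs) * pstdev(ys))


# Hypothetical per-window accelerometer standard deviations and labels
# (0 = street, 1 = sidewalk).
std_z = [0.05, 0.07, 0.06, 0.31, 0.28, 0.35]
labels = [0, 0, 0, 1, 1, 1]

r = pearson(std_z, labels)
```

Ranking candidate metrics by the magnitude of this correlation is a quick, model-free way to decide which features are worth feeding to a classifier.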

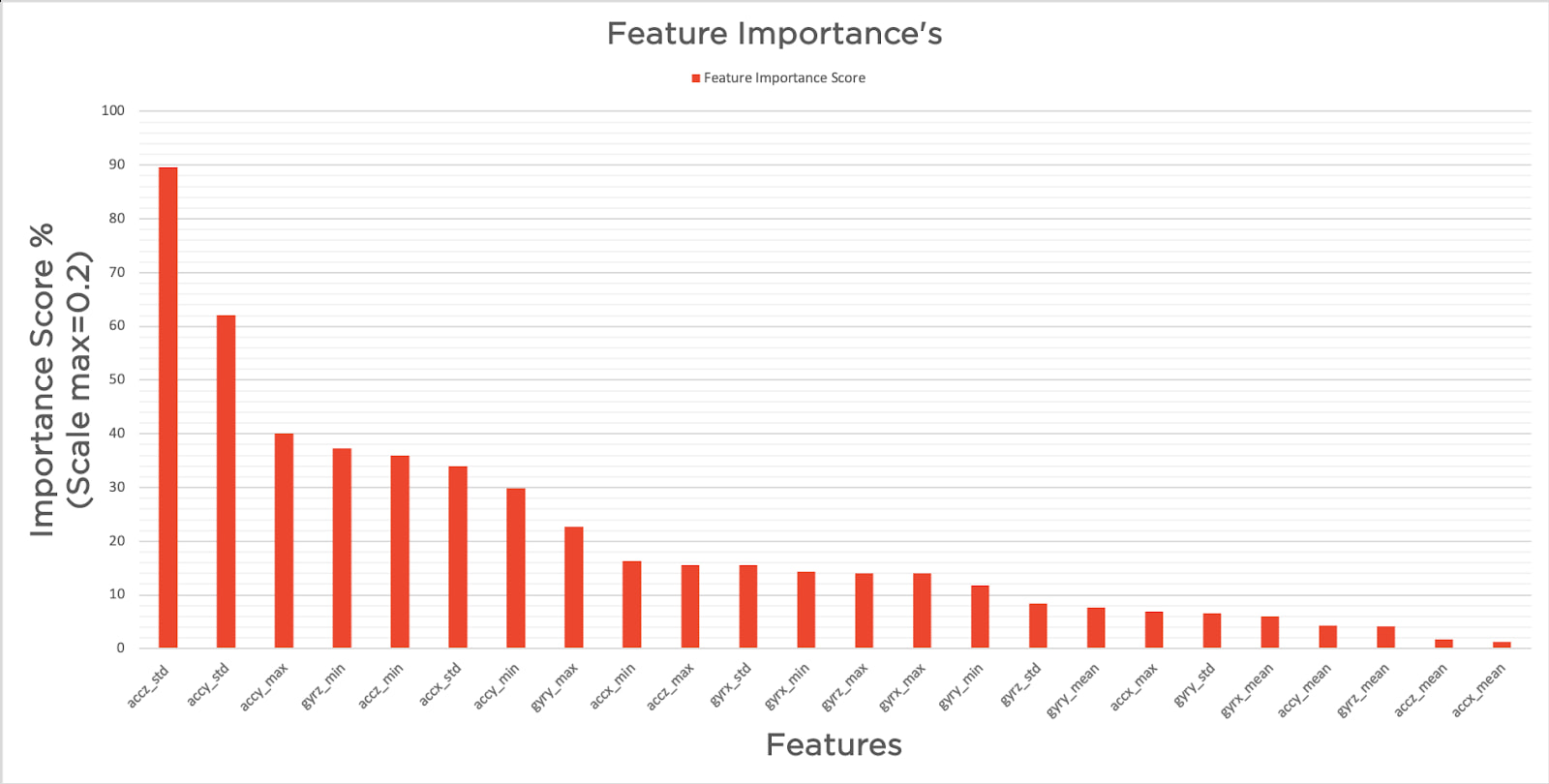

Rather than using a traditional neural network, we decided to use Random Forest Classification to generate our model. A Random Forest is what’s known as an ensemble algorithm: a collection of many individual decision trees created at training time, which together produce a prediction by majority vote. Random Forest Classifiers are typically much less computationally demanding than neural networks, making them well suited to the limited computational and power resources available on a scooter.
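The voting step can be sketched in a few lines. Here the “trees” are hand-written decision stumps over hypothetical window features; in a real Random Forest each tree is learned from a bootstrap sample of the training data, so this only illustrates how the ensemble combines its members’ outputs.

```python
from collections import Counter


# Hypothetical "trees": hand-written decision stumps over window features.
# A trained Random Forest would learn these splits from data instead.
def tree_a(f):
    return "sidewalk" if f["z_std"] > 0.2 else "street"


def tree_b(f):
    return "sidewalk" if f["y_std"] > 0.15 else "street"


def tree_c(f):
    return "sidewalk" if f["z_max"] - f["z_min"] > 1.0 else "street"


def forest_predict(trees, features):
    """Return the label that receives the most votes across all trees."""
    votes = Counter(tree(features) for tree in trees)
    return votes.most_common(1)[0][0]


trees = [tree_a, tree_b, tree_c]
bumpy = {"z_std": 0.3, "y_std": 0.1, "z_max": 11.0, "z_min": 9.5}
smooth = {"z_std": 0.1, "y_std": 0.1, "z_max": 10.0, "z_min": 9.5}
```

With an odd number of trees and two classes, a hard vote can never tie. Note that some libraries differ slightly: scikit-learn’s `RandomForestClassifier`, for example, averages the trees’ predicted class probabilities rather than counting hard votes.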

We designed a Random Forest Classifier trained on 21 different metrics pre-processed from our training dataset. Once we were computing the correct metrics over an appropriate moving window, we improved our model’s accuracy to 94.5% on our test dataset.
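For readers who want to try a similar setup, the sketch below trains scikit-learn’s `RandomForestClassifier` on 21 features per window and measures held-out accuracy. The data is synthetic (sidewalk windows are simply shifted upward in every feature), and the library choice, hyperparameters, and split ratio are our assumptions; the resulting accuracy says nothing about the 94.5% figure reported for real data.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

N_FEATURES = 21  # matches the number of pre-processed metrics described above

rng = np.random.default_rng(seed=0)
n_windows = 400
labels = rng.integers(0, 2, size=n_windows)  # 0 = street, 1 = sidewalk

# Synthetic stand-in for real window features: sidewalk windows are shifted
# upward by 1.0 in every feature.
X = rng.normal(0.0, 1.0, size=(n_windows, N_FEATURES)) + labels[:, None] * 1.0

X_train, X_test, y_train, y_test = train_test_split(
    X, labels, test_size=0.25, random_state=0, stratify=labels
)

# Shallow, modest forests keep the model small enough to consider porting
# to a microcontroller later.
clf = RandomForestClassifier(n_estimators=50, max_depth=8, random_state=0)
clf.fit(X_train, y_train)
accuracy = accuracy_score(y_test, clf.predict(X_test))
```

Limiting `max_depth` and `n_estimators` trades a little accuracy for a model whose trees can realistically be exported to on-device code.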

With a model designed and tested to high accuracy on our test data, it was time to evaluate it with real-world data in real time!

We modified our sensor platform to take incoming readings from the IMU connected to our MCU and run the Random Forest Classifier on processed samples to produce results in real time. We configured an LED to give a live indication of the sidewalk versus street classification as it ran. The system detected the new terrain within 3 seconds of a transition. The output was not entirely stable; however, false positives and negatives occurred at an extremely low rate, and with a moving window these errors mostly averaged out over time.
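A moving majority vote over recent predictions is one straightforward way to make occasional errors average out. The sketch below is our own illustration, not necessarily how the prototype smoothed its output; the window size of 5 is an arbitrary assumption.

```python
from collections import Counter, deque


class SmoothedClassifier:
    """Report the majority label among the last `window` raw predictions.

    Ties (possible while the history is still filling) go to the label that
    appears earliest in the current history.
    """

    def __init__(self, window=5):
        self.history = deque(maxlen=window)

    def update(self, raw_prediction):
        self.history.append(raw_prediction)
        return Counter(self.history).most_common(1)[0][0]


# Hypothetical raw output: a single spurious "sidewalk" blip, then a real
# transition from street to sidewalk.
raw = ["street"] * 5 + ["sidewalk"] + ["street"] * 4 + ["sidewalk"] * 5
smoother = SmoothedClassifier(window=5)
outputs = [smoother.update(r) for r in raw]
```

The isolated blip is suppressed, but the real transition is only reported after three consecutive sidewalk predictions. A longer window gives a steadier LED at the cost of slower response after a transition, which is the same tradeoff behind the few-second detection delay.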

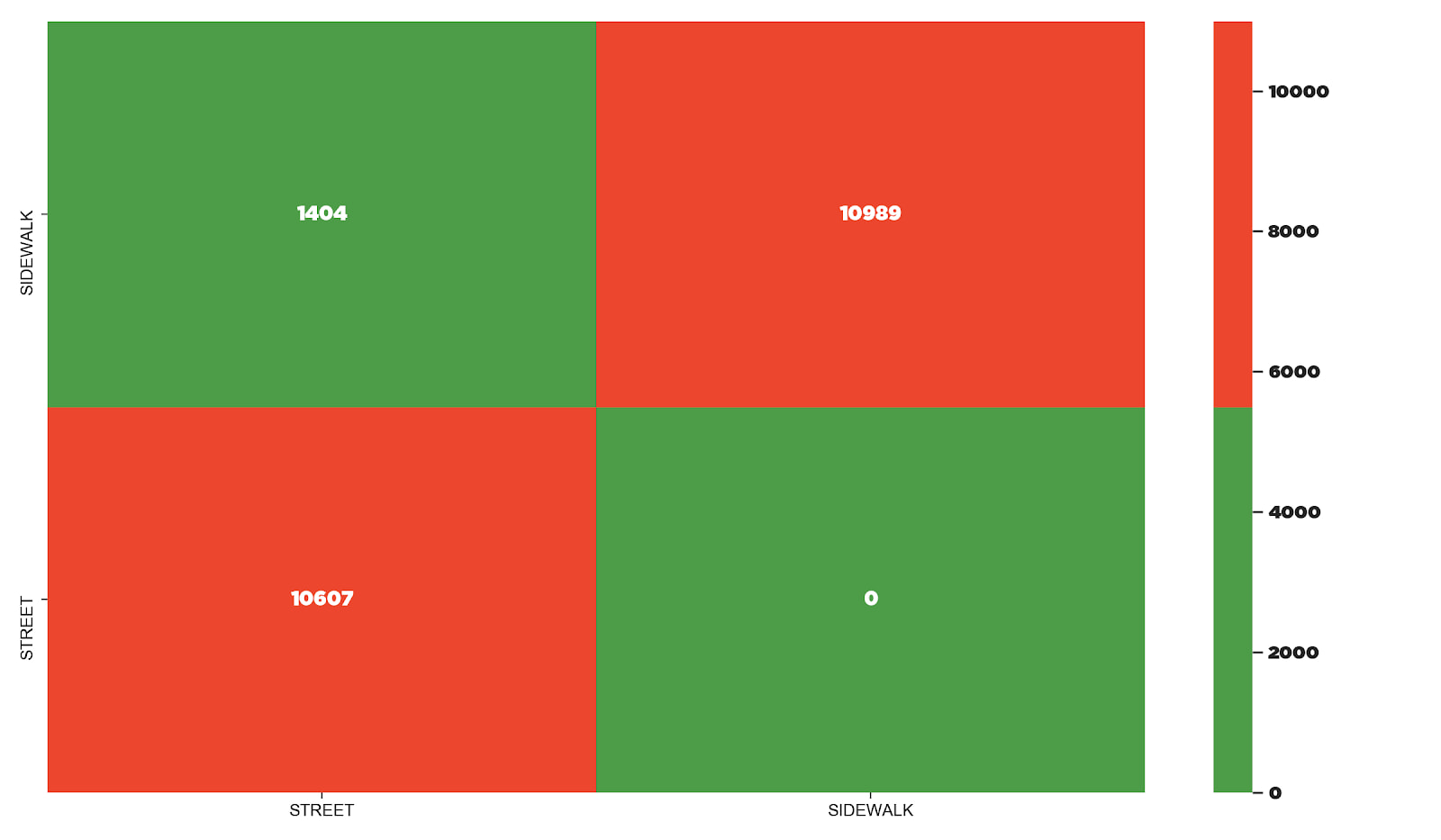

We did have some concerns about overfitting and were unsure how accurate the model would be on sidewalks and streets other than the block where we gathered our data. However, after testing it, we were pleasantly surprised to find that our model worked on a wide variety of sidewalks. In the confusion matrix generated from evaluating the Random Forest model, all of our errors were false positives. We believe this was likely due to overfitting, given the nature of the terrain differences, the nature of our analysis, and the limited dataset we gathered for this exercise.
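For a binary street/sidewalk classifier, the confusion matrix reduces to four counts. The sketch below computes them, assuming “sidewalk” is the positive class (so a false positive means a street window classified as sidewalk); the labels in the example are hypothetical.

```python
def confusion_counts(y_true, y_pred, positive="sidewalk"):
    """Count true/false positives and negatives for a binary classifier."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1  # street window flagged as sidewalk
        else:
            if truth == positive:
                fn += 1  # sidewalk window missed
            else:
                tn += 1
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn}


# Hypothetical evaluation in which the only error is a false positive.
y_true = ["street", "street", "sidewalk", "street", "sidewalk"]
y_pred = ["street", "sidewalk", "sidewalk", "street", "sidewalk"]
counts = confusion_counts(y_true, y_pred)
```

Separating the two error types matters here: false positives would warn riders who are legitimately on the street, while false negatives would let sidewalk riding go undetected.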

This project taught us a good deal about the nature of terrain classification and about classifying IMU data. We believe that with additional work on algorithms that further isolate the exact features we are looking for, we could further increase the model’s accuracy. Additionally, if this type of solution were deployed to a fleet of micromobility devices, once a baseline accuracy had been established, techniques could be used to automatically improve model accuracy and response time using data collected from actual customer rides.

We also showed that analyzing and classifying simple sensor data can yield significant insight into what is happening on a scooter and what situation it is in. With some creative modifications, this technology could be adapted to detect user falls, vandalism, or other aberrant treatment of a device.

New IMUs with ML cores, such as the ST LSM6DSOX, are arriving on the market, and they are well suited to applications such as sidewalk detection. These chips are extremely powerful and can add significant capabilities with minimal added cost of goods sold and power consumption, although taking full advantage of them requires a certain level of expertise.

Finally, sidewalk detection is particularly interesting to us because it sits at the intersection of technology, mobility, and pedestrian right-of-way. This type of technology enables companies to glean insights into the behavior of customers, but how they use that data is of great importance. Properly used, this new capability makes it possible to influence customer behavior while also improving customers’ well-being. We want to ensure that we are helping to positively influence each other and the society in which we live. Reducing the number of collisions between pedestrians and micromobility riders is one step forward in that journey.